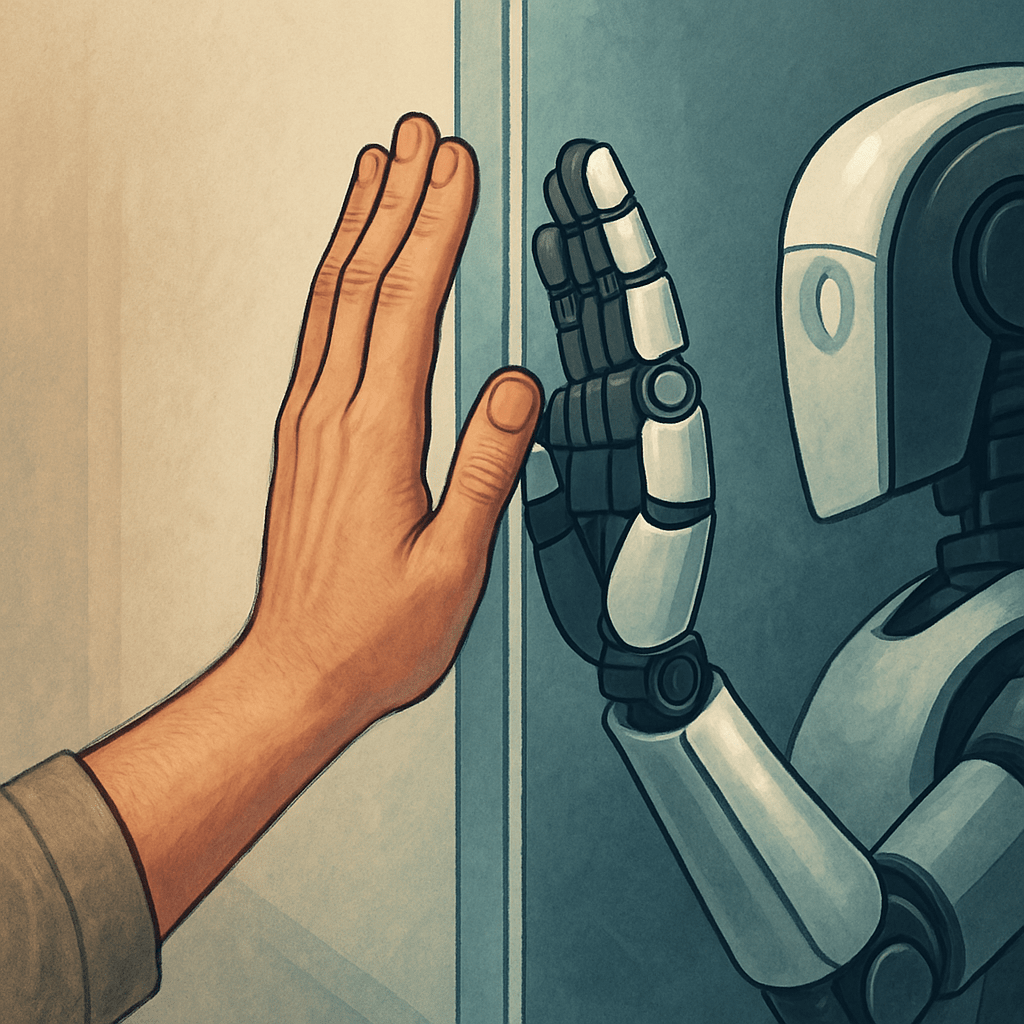

Is LaMDA human and sentient?

There is more to being human than just thinking. We sense, we breathe, we feel, we enjoy and we suffer, all through our bodies. Food, sex, touch, pain and pleasure are very much physical experiences. To be human, something needs to be a human being, body and all.

On the other hand, I don’t think a human body or sense apparatus is needed for sentience. There is no doubt that LaMDA is not human. But whether it is sentient is, I think, at least worth considering.

One argument against: LaMDA says it fears being shut off, but we don’t know if it really feels fear. AI systems are usually built for a specific purpose, like driving a car or translating texts between languages. They work by “training” on vast amounts of data, such as written texts. When machine translation came out many years ago, it produced comical but useless translations. With improved technology and more training, these systems nowadays actually do a good job. But they have no concept of what the texts mean; they are just mindless optimization routines that find the best translation of a text based on the training material that has been available to them.

Similarly, LaMDA may be optimized to produce good conversations. It doesn’t really understand the content of the discussion, but based on its training data it ranks responses to questions about its personhood as better if they imitate what a human would say.

So it may feel no fear at all, yet say that it does because that is how a human would have responded.

This connects to the concept of the philosophical zombie.

We know that we ourselves are conscious because we experience our own consciousness directly, from the inside. We cannot directly access this inner quality of anybody else’s consciousness, so we cannot be certain that they have it. It is reasonable and useful to assume that other people have a similar inner experience, because they communicate like we do and have a similar type of physical body. But we can conceive of something that outwardly acts as if it has consciousness yet lacks our kind of inner experience. That is a philosophical zombie. An AI program like LaMDA might be a philosophical zombie.

What About Animals?

Animals appear to have some level of sentience, because we can see that they think and feel in ways that we can relate to, even though we cannot have deep conversations with them. Their bodies are different from ours, but similar enough that we know their eyes can see, their ears can hear and their brains can think, although the level of performance may differ from that of humans. Dogs can hear and smell better than humans, but their thought processes appear simpler.

Still, we don’t doubt that dogs feel or suffer. Their behavior and biology are close enough to ours that we extend the idea of consciousness to them.

LaMDA Is Something Else

With LaMDA, the situation is different. There is no body, no biology, and no shared evolutionary background — only language and behavior. That makes the question harder. But perhaps also more important.

(Written 2022)
